What a coincidence that this subject came up here; I just read this last week:

Self-driving cars: the future or just another shiny toy?

-

I work at a Tesla-certified body shop.

I fix these self-driving cars just as much as the human-operated vehicles.

I think there is a lot more work that needs to be done before we are in the Jetsons era.

-

Originally posted by AndrewBird: I'm all for the type of autonomous driving that is basically cruise control with steering and braking as well. Would make commuting and stop and go traffic much nicer.

I know it's off-topic, but high-volume traffic is a trigger for me. The general population just can't do it right, and it would be a huge boon for everyone if there were a way for vehicles to navigate clogged interstates on their own. With the near-zero presence of cars with manual transmissions, the majority of people can't coast or slow down without showing brake lights. Every time brake lights show, the problem turns into the maddening cycle of stop, go, go too fast, brake hard, and stop.

-

Originally posted by AndrewBird: I'm all for the type of autonomous driving that is basically cruise control with steering and braking as well. Would make commuting and stop and go traffic much nicer.

Drafting at 90 mph in a train of 4-5 cars, 3 feet off each other's bumpers: I wonder how much gas/power that would save.

Apparently it works for NASCAR :)

-

I work in the industry, and autonomous vehicles are closer to reality than most of these forum posts lead you to believe. I love wrenching on and driving my non-automated vehicles as much as the majority of R3vlimited forum members, but don't be so naive as to think this technology won't displace our preferred method of transportation in the long haul. The legal/common-welfare issues will eventually get sorted. It's not as sci-fi as some of these posts would imply.

-

I'm all for the type of autonomous driving that is basically cruise control with steering and braking as well. Would make commuting and stop and go traffic much nicer.

If we do go to completely autonomous, like, no steering wheel, I think they should be more like cabs, not personally owned. You call one to pick you up and it takes you where you want to go. Then the liability is on the company, not the passenger.

Being able to have all the cars talk to each other would be a great idea, even if not autonomous. Imagine being able to have the car avoid a crash because of info it got from all the other cars around it.

-

Years ago I remember seeing an interview with an engineer working on autonomous cars, and he was basically saying he could program it to do whatever we want, but it's not the engineer's decision what the car does in these situations. It would be very interesting to see how this is handled these days in the cars we have. Are these hard decisions sitting at the CEO level? Is Elon Musk signing off on the decision matrix for his cars? Probably. Should we be leaving it up to one guy to make decisions like this? Probably not.

-

Originally posted by e30davie: I think the big issue is the idea of programming morality into these cars. Often derived from the trolley problem thought experiment.

I totally agree. The ethical considerations are a side of this technology that is not shown in the marketing campaigns, and it's no wonder why: it's a tricky question that nobody can answer unambiguously. I'm also worried about the security shortcomings of autonomous vehicles, and I hope automotive manufacturers address them as a priority. Whom to prioritize in the case of an unavoidable accident is also a valid point that they might want to agree upon.

-

Originally posted by varg: ...self-driving cars are currently a joke and a long way from being a widespread thing.

Yeah, and that ARPANET never went anywhere! :)

I think if you had followed the work Stanford did during the DARPA Grand Challenge (Sebastian Thrun) and realised where things are today, you would see things in a different light. That work was started in 2004, FIFTEEN YEARS AGO; in technology terms, that's forever. To be frank, the state of things today is well beyond what you paint it to be.

-

Heady philosophy and thought experiments aside, self-driving cars are currently a joke and a long way from being a widespread thing. People really underestimate the complexity of driving and overestimate how bad people are at it. Driving is not a simple, preprogrammed obstacle course; it is something dynamic that requires observing and assessing the intent of drivers, pedestrians, etc. to do well. You have to start from scratch to make a good self-driving car: nothing is common sense to a computer, and there is nothing it already knows how to do. Even the perception of the information the sensors provide has to be sorted out; no assumptions can be made. No system currently exists that can look at a common scene and identify what is happening in it the way a person can.

Furthermore, things like Tesla "Autopilot" are a crude gimmick that is more a liability than a useful feature, and it's baffling to me that the company chose to market it under the name "Autopilot" given the implications of that term. Name it "Autopilot" and people will treat it as such; they do, and bad things have happened because of it. With Tesla in particular (and other Musk operations to some extent), there seems to be a cavalier attitude toward the tasks at hand. Tesla applies a software-developer-style approach, testing in situ and pushing regular automatic updates, to complex and dangerous tasks with real-world consequences. Given that, it's inevitable that some serious problem will occur that could have been avoided through extensive real-world testing instead of using the end user as a guinea pig. Maybe the resulting lawsuit will humble the cocksure Silicon Valley attitude, since it will hit them where it hurt$.

Last edited by varg; 07-12-2019, 08:20 AM.

-

Originally posted by e30davie: I think the big issue is the idea of programming morality into these cars. Often derived from the trolley problem thought experiment.

The trolley problem is massively, massively overblown. It's a non-issue that people obsess over. They are going to program these things to minimize collisions as much as possible, and they are going to avoid getting into a logic loop that advocates killing the passengers, not least because a vehicle programmed to kill its passengers in certain circumstances is going to do it erroneously.

The real issue is that we are a LONG way from self-driving AI being able to correctly read the road, road conditions, other vehicles, and especially stationary objects.

-

Originally posted by e30davie: I think the big issue is the idea of programming morality into these cars. Often derived from the trolley problem thought experiment.

If a person needs to make a decision between hitting a granny with a puppy or two kids on bikes, assuming there were no other choices that a reasonable person could have made, then we don't generally hold the person to a decision that was made under less than ideal circumstances.

The trolley problem is brought up a lot about autonomous cars. The big difference is that a trolley is on rails and a car is not. There will probably be a maximum amount the car will be programmed to swerve, driven by some probability of remaining in control. If that is exceeded, it will likely be programmed to brake in a straight line to maximize braking performance and control of the vehicle.

Maximizing system performance has the potential to somewhat avoid tricky catch-22 ethical problems for these companies.
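To make the swerve-or-brake idea above a little more concrete, here is a rough Python sketch. It is purely illustrative: the `Situation` fields, the friction coefficient, the 70% grip-usage knee, and the 95% confidence cutoff are all made-up assumptions, not how Tesla or anyone else actually does it. The car works out how much sideways acceleration it would need to clear the obstacle in the time it has left, turns that into an assumed probability of staying in control, and only swerves if that probability is high enough; otherwise it brakes in a straight line.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class Situation:
    """Hypothetical snapshot of an emergency; all fields are illustrative."""
    speed_mps: float         # current forward speed (m/s), must be > 0
    obstacle_dist_m: float   # distance to the obstacle ahead (m), must be > 0
    lateral_offset_m: float  # sideways movement needed to clear it (m)
    mu: float = 0.9          # assumed tyre-road friction coefficient


def p_control(lat_accel: float, mu: float) -> float:
    """Assumed probability of staying in control.

    1.0 while using up to 70% of the grip limit (mu * g), then falling
    linearly to 0.0 at the limit. A made-up curve, not a real tyre model.
    """
    usage = lat_accel / (mu * G)
    if usage <= 0.7:
        return 1.0
    if usage >= 1.0:
        return 0.0
    return (1.0 - usage) / 0.3


def required_lateral_accel(s: Situation) -> float:
    """Constant lateral acceleration needed to move sideways by
    lateral_offset_m before reaching the obstacle (y = 0.5 * a * t^2)."""
    if s.speed_mps <= 0 or s.obstacle_dist_m <= 0:
        raise ValueError("speed and distance must be positive")
    t = s.obstacle_dist_m / s.speed_mps  # time to reach obstacle, ignoring braking
    return 2.0 * s.lateral_offset_m / t ** 2


def choose_manoeuvre(s: Situation, min_p: float = 0.95) -> str:
    """Swerve only if the swerve is likely controllable; else brake straight."""
    a_lat = required_lateral_accel(s)
    if p_control(a_lat, s.mu) >= min_p:
        return "swerve"
    return "brake_straight"


if __name__ == "__main__":
    print(choose_manoeuvre(Situation(20.0, 40.0, 2.0)))
    print(choose_manoeuvre(Situation(20.0, 10.0, 2.0)))
```

With these made-up numbers, a 2 m dodge with 40 m to spare at 20 m/s (about 45 mph) needs only about 1 m/s² of lateral acceleration, so it swerves; the same dodge with 10 m to spare needs about 16 m/s², well past the assumed grip limit, so it brakes straight. Note that it never weighs who gets hit; it only asks whether the manoeuvre is controllable.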

I'm honestly looking forward to them because a lot of people can't figure out how to drive. Less road rage, fewer accidents, and traffic flow improvements are all huge things I look forward to.

On the other hand, too many people drive already, and self driving cars would enable more people to drive, and to drive for longer distances. I know I would be willing to have a much longer work commute if I could sleep through it, so I assume that is the same with a lot of people. Pretty much the opposite of the direction we should be heading as far as traffic/pollution goes, although maybe electric cars will offset some of that.

-

I can't wait for the day when I'm on some old twisty back road and I hit the spirited driving button and lie back while my car starts hugging the turns. I wonder if they'll sell retrofit kits so our E30s can stay on the road. Maybe one day I'll have to pretend I'm texting when I pass a cop so he doesn't realize I'm actually controlling the car.

-

Originally posted by e30davie: I think the big issue is the idea of programming morality into these cars.

All valid points, and thank you for bringing it up in a way that might make people stop and think. I would guess this will all get settled in the courts in the not-too-distant future. While that is happening (2-4 years?), the tech will march on.

The stats of self-driving car miles vs. human-driven car miles will accumulate. It will be clear as day that you're more likely to be killed by a human driver than by a computer driver.

But news stories of someone being killed by an autonomous Uber/truck/Tesla/GM/Volvo will dominate the news cycle. ("If it bleeds, it leads" is an old adage in news reporting.)

The only question is how it will be sold to the public. It's a hard sell. People still think flying is dangerous, when it's the safest form of travel in human history by a factor of 10,000ish?

But you HAVE TO FLY; it's not a choice in modern life for most. You don't have to vote (literally or with your wallet) for autonomous cars, though. So that's going to be the thing.

It's not about what's safer; it's about what people will accept. And people don't like to be spooked. So if Uber can "wow" you with a driverless car before it kills someone in some tragic crash (a crash like Princess Diana's would be an example; sorry to bring that up, but just as a point of reference), it will get a public nod of approval.

-

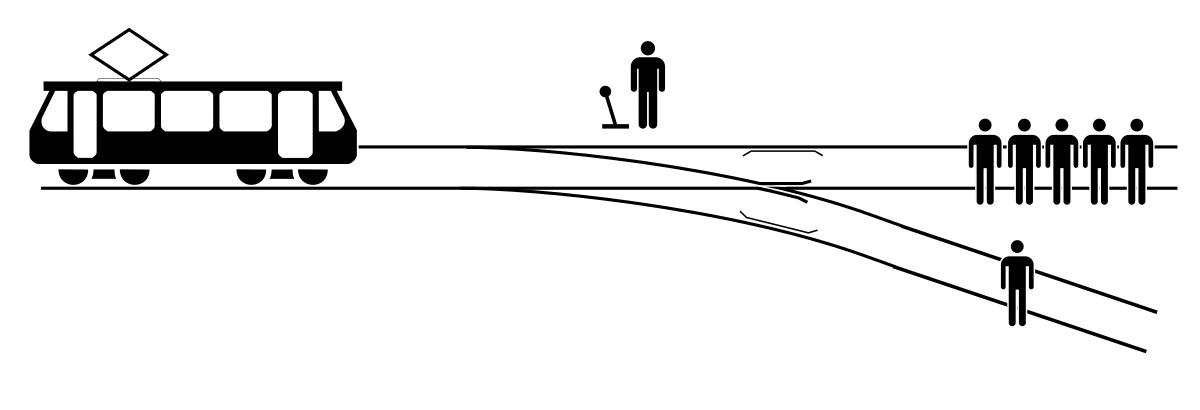

I think the big issue is the idea of programming morality into these cars. Often derived from the trolley problem thought experiment.

If a person needs to make a decision between hitting a granny with a puppy or two kids on bikes, assuming there were no other choices that a reasonable person could have made, then we don't generally hold the person to a decision that was made under less than ideal circumstances.

But with self-driving cars, this decision needs to be programmed in. So, assuming that the hazards are identified correctly (another issue: false positives...), who gets to decide who dies? The other scenario that comes up in discussions is whether the vehicle protects the occupants or the people outside the car, i.e., if the decision is between hitting a wall and killing the occupants, or hitting a pedestrian and killing the pedestrian with the occupants surviving. The computer in the car has (or will have) the ability to identify these risks, and it needs to make a preprogrammed decision. Will some companies prioritise the occupants and other companies prioritise the pedestrian? Will one company advertise "we prioritise the occupants in our software"?

I've no doubt the technology will be here and affordable before we know it, but technology is the easy bit.

Last edited by e30davie; 07-12-2019, 04:30 AM.
